In big data systems like Hadoop, you often have many jobs that need to be run in a particular order or on a schedule.

For example: Ingest Data → Clean Data → Run Analytics → Send Results

You can’t just run them manually every time or hope they happen in the right order. That’s where Oozie comes into the picture. Apache Oozie is a workflow scheduler system specifically designed for managing and running Hadoop jobs. It allows you to define a sequence of actions (like MapReduce, Pig, Shell, Java programs) that run in a specific order with conditional logic and time triggers.

Think of Oozie like a calendar and traffic controller for big data tasks in a Hadoop system. It helps you say “Run this job every day at 3 AM” or “Run job B only after job A finishes successfully.” It’s the system that makes sure all your big data jobs run in the right order and at the right time, and that a failed step is handled instead of quietly breaking everything after it.

A fun fact about the name Oozie is that it’s actually a Burmese word for a mahout, or “elephant rider” 🐘, a fitting name since Hadoop’s logo is an elephant, and Oozie controls Hadoop jobs!

When working with Oozie, there are three main building blocks you’ll need to understand: workflow.xml, coordinator.xml and bundle.xml.

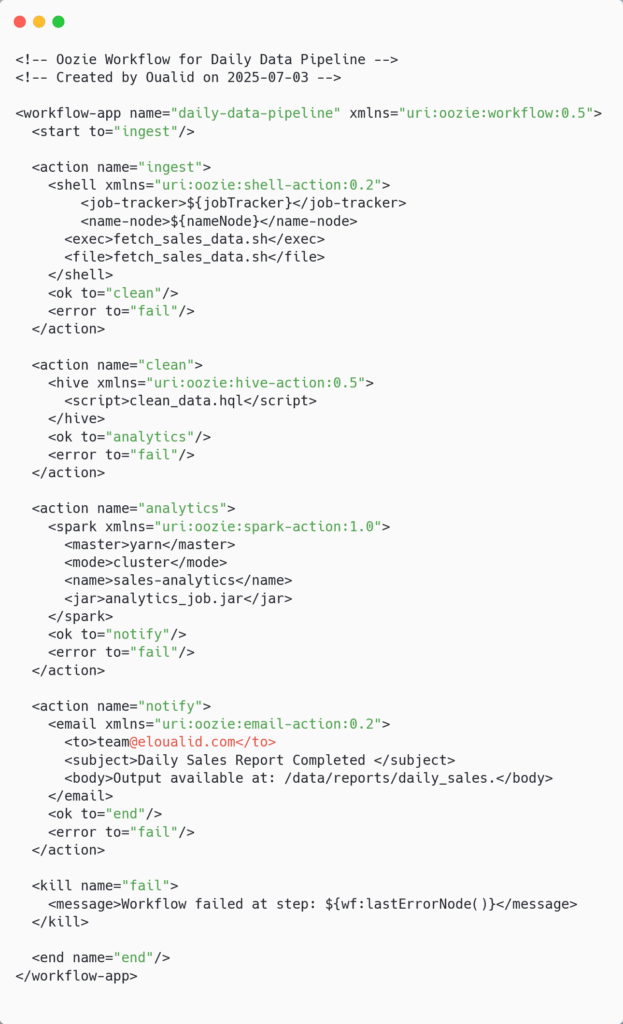

- Workflow:

A Directed Acyclic Graph (DAG) of actions, a fancy term for a set of steps that only flows forward and never loops back. Oozie makes sure these steps run in order, and it knows what to do if something goes wrong. Each action has defined transitions (ok, error), and control nodes (start, end, kill, decision, fork, join) tie the steps together. Here is the workflow.xml file for the example above:

📄workflow.xml

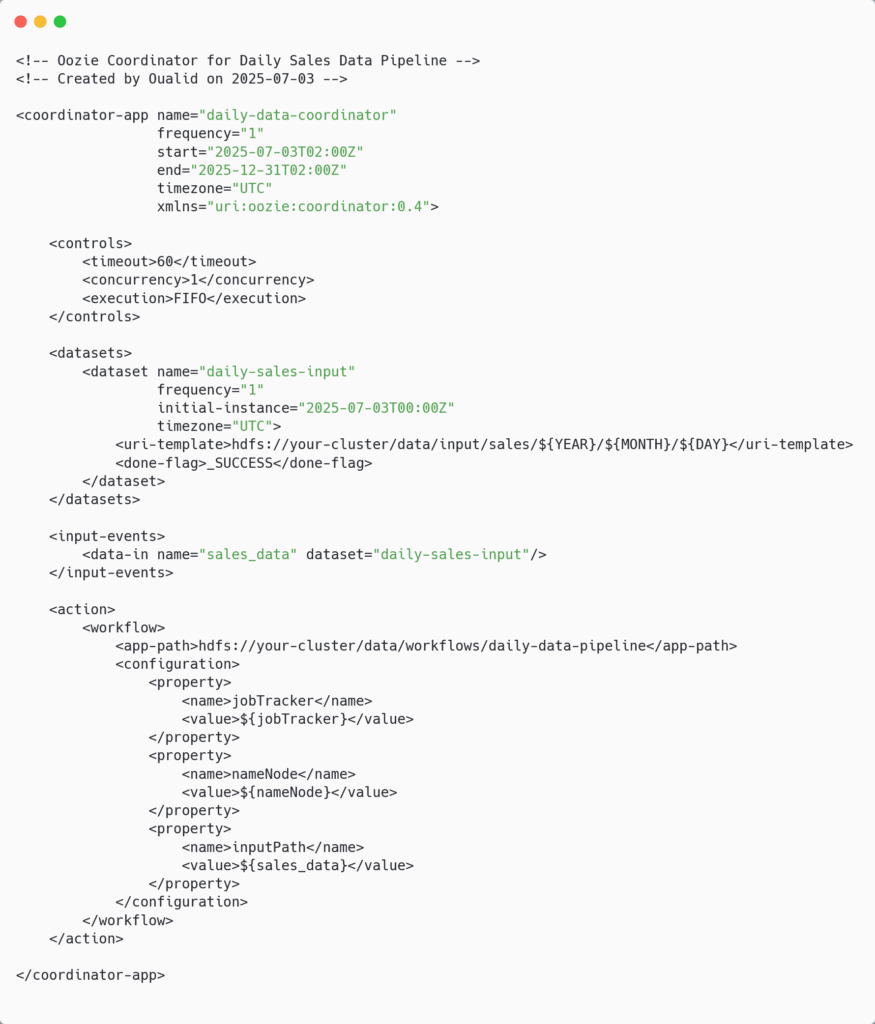
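
In text form, a workflow for the four-step pipeline could be sketched roughly like this. It is an illustrative sketch, not a copy of the file pictured: the action names, script names (`ingest.sh`, `clean.pig`, `analytics.sh`), the `${appPath}`, `${rawDir}` and `${cleanDir}` properties, and the email address are all assumptions made up for the example.

```xml
<workflow-app name="daily-pipeline-wf" xmlns="uri:oozie:workflow:0.5">
    <!-- Control node: where the DAG begins -->
    <start to="ingest-data"/>

    <!-- Step 1: ingest, run as a shell script (hypothetical ingest.sh) -->
    <action name="ingest-data">
        <shell xmlns="uri:oozie:shell-action:0.2">
            <job-tracker>${jobTracker}</job-tracker>
            <name-node>${nameNode}</name-node>
            <exec>ingest.sh</exec>
            <file>${appPath}/ingest.sh#ingest.sh</file>
        </shell>
        <ok to="clean-data"/>
        <error to="fail"/>
    </action>

    <!-- Step 2: clean, run as a Pig script (hypothetical clean.pig) -->
    <action name="clean-data">
        <pig>
            <job-tracker>${jobTracker}</job-tracker>
            <name-node>${nameNode}</name-node>
            <script>clean.pig</script>
            <param>INPUT=${rawDir}</param>
            <param>OUTPUT=${cleanDir}</param>
        </pig>
        <ok to="run-analytics"/>
        <error to="fail"/>
    </action>

    <!-- Step 3: analytics, again a shell script for brevity -->
    <action name="run-analytics">
        <shell xmlns="uri:oozie:shell-action:0.2">
            <job-tracker>${jobTracker}</job-tracker>
            <name-node>${nameNode}</name-node>
            <exec>analytics.sh</exec>
            <file>${appPath}/analytics.sh#analytics.sh</file>
        </shell>
        <ok to="send-results"/>
        <error to="fail"/>
    </action>

    <!-- Step 4: send results by email (placeholder address) -->
    <action name="send-results">
        <email xmlns="uri:oozie:email-action:0.1">
            <to>team@example.com</to>
            <subject>Daily pipeline finished</subject>
            <body>Workflow ${wf:id()} completed successfully.</body>
        </email>
        <ok to="end"/>
        <error to="fail"/>
    </action>

    <!-- Control nodes: every error transition lands here -->
    <kill name="fail">
        <message>Failed at [${wf:lastErrorNode()}]: ${wf:errorMessage(wf:lastErrorNode())}</message>
    </kill>
    <end name="end"/>
</workflow-app>
```

Each action names its own `ok` and `error` transition, which is how Oozie knows where to go next and what to do when a step fails.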

- Coordinator:

Schedules workflows based on time, data availability, or external events. It’s a scheduler with intelligence. It doesn’t just say, “Run every day at 6 AM”. It can also say: “Run this workflow when the data is ready” or “Wait until this file shows up in HDFS before starting”. The coordinator file for our previous workflow setup will look something like this:

📄coordinator.xml
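
A minimal sketch of such a coordinator, assuming a daily 06:00 UTC run that also waits for that day’s input directory to appear in HDFS. The dataset name, the `/data/raw/...` path layout, the dates, and the `${workflowAppPath}` property are hypothetical values chosen for illustration.

```xml
<coordinator-app name="daily-pipeline-coord"
                 frequency="${coord:days(1)}"
                 start="2025-01-01T06:00Z" end="2025-12-31T06:00Z"
                 timezone="UTC"
                 xmlns="uri:oozie:coordinator:0.4">

    <!-- Describes where each day's input data is expected to land -->
    <datasets>
        <dataset name="raw-input" frequency="${coord:days(1)}"
                 initial-instance="2025-01-01T06:00Z" timezone="UTC">
            <uri-template>${nameNode}/data/raw/${YEAR}/${MONTH}/${DAY}</uri-template>
            <!-- The instance counts as "ready" once this marker file exists -->
            <done-flag>_SUCCESS</done-flag>
        </dataset>
    </datasets>

    <!-- Data trigger: wait for the current day's instance of raw-input -->
    <input-events>
        <data-in name="input" dataset="raw-input">
            <instance>${coord:current(0)}</instance>
        </data-in>
    </input-events>

    <!-- What to run once both the time and data conditions are met -->
    <action>
        <workflow>
            <app-path>${workflowAppPath}</app-path>
        </workflow>
    </action>
</coordinator-app>
```

The `frequency`, `start` and `end` attributes cover the time-based part, while the `datasets` and `input-events` sections express the “wait until the data shows up” part. Dropping those two sections would leave a purely time-based schedule.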

- Bundle:

Groups multiple coordinators for complex pipeline management. It’s like a project folder that holds several coordinators together. So if we have, for example, a daily job, a weekly cleanup, and a monthly report, we can package them all into one bundle and manage them together, like deploying one project instead of three separate ones.
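
As a rough sketch, a bundle.xml for that daily, weekly and monthly example might look like the following. The coordinator names and the `/apps/pipeline/...` paths are assumptions, and each path is expected to contain its own coordinator.xml.

```xml
<bundle-app name="pipeline-bundle" xmlns="uri:oozie:bundle:0.2">
    <!-- Each entry points at the HDFS location of one coordinator app -->
    <coordinator name="daily-job">
        <app-path>${nameNode}/apps/pipeline/daily</app-path>
    </coordinator>
    <coordinator name="weekly-cleanup">
        <app-path>${nameNode}/apps/pipeline/weekly-cleanup</app-path>
    </coordinator>
    <coordinator name="monthly-report">
        <app-path>${nameNode}/apps/pipeline/monthly-report</app-path>
    </coordinator>
</bundle-app>
```

Starting, suspending or killing the bundle then applies to all three coordinators at once.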

Even though newer workflow tools like Airflow have become quite popular, Oozie remains a reliable option for teams already working with Hadoop. One big reason is its native integration with core Hadoop technologies like YARN and HDFS: it submits jobs to the cluster directly, without extra plugins. Another thing that keeps Oozie relevant is its simplicity. Workflows are defined in plain XML and managed with command-line tools, so you don’t need a Python runtime to author or run them (the Oozie server itself does keep job state in a SQL database, though). Because the definitions are declarative, the whole graph of steps is fixed before a job starts, which makes your workflows easier to manage and more predictable, especially when stability is a priority.

In the next post, I’ll walk you through some of Oozie’s more advanced capabilities. For example: setting up SLAs to keep your critical jobs on schedule, running workflows only when the right data lands, and building in smart retry logic for when things occasionally go wrong.